A proper Growth Process helps you to run more experiments, get more learnings and get more wins.

My name is Ward van Gasteren and I’ve worked as a Freelance Growth Consultant for the past 9 years with 80+ clients worldwide, including TikTok, Pepsi and Unilever, but also smaller startups like Zigzag, Klearly and StartMail, to help them run better experiments and manage their growth process.

In this article, I’ll show you step-by-step:

– How to keep track of experiments

– How to plan growth experiments

– Which best practices are useful and which are useless.

And I’ll share some growth experiment templates to save you some time.

Let’s start! 🚀

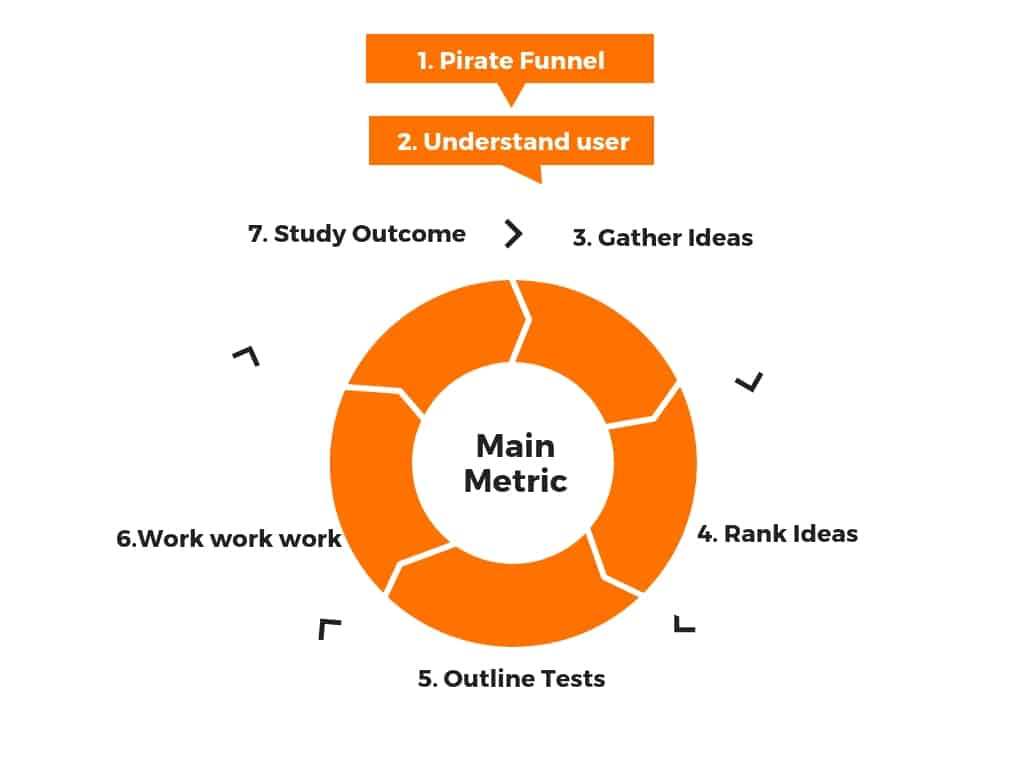

8 Steps of Running Growth Experiments

As you might know, the process consists of two preparation steps plus a cycle of six steps, and I’ll walk you through it step-by-step.

- Picking the right focus / OMTM

- How to find the real bottleneck

- Create a backlog of growth experiments

- Prioritize ideas (We’ll discuss multiple models)

- Plan your growth experiment correctly

- Start working in week-to-week sprints

- How to analyze your experiment results

- Complete Cycle (Iterate, Stakeholders & How to Document Learnings)

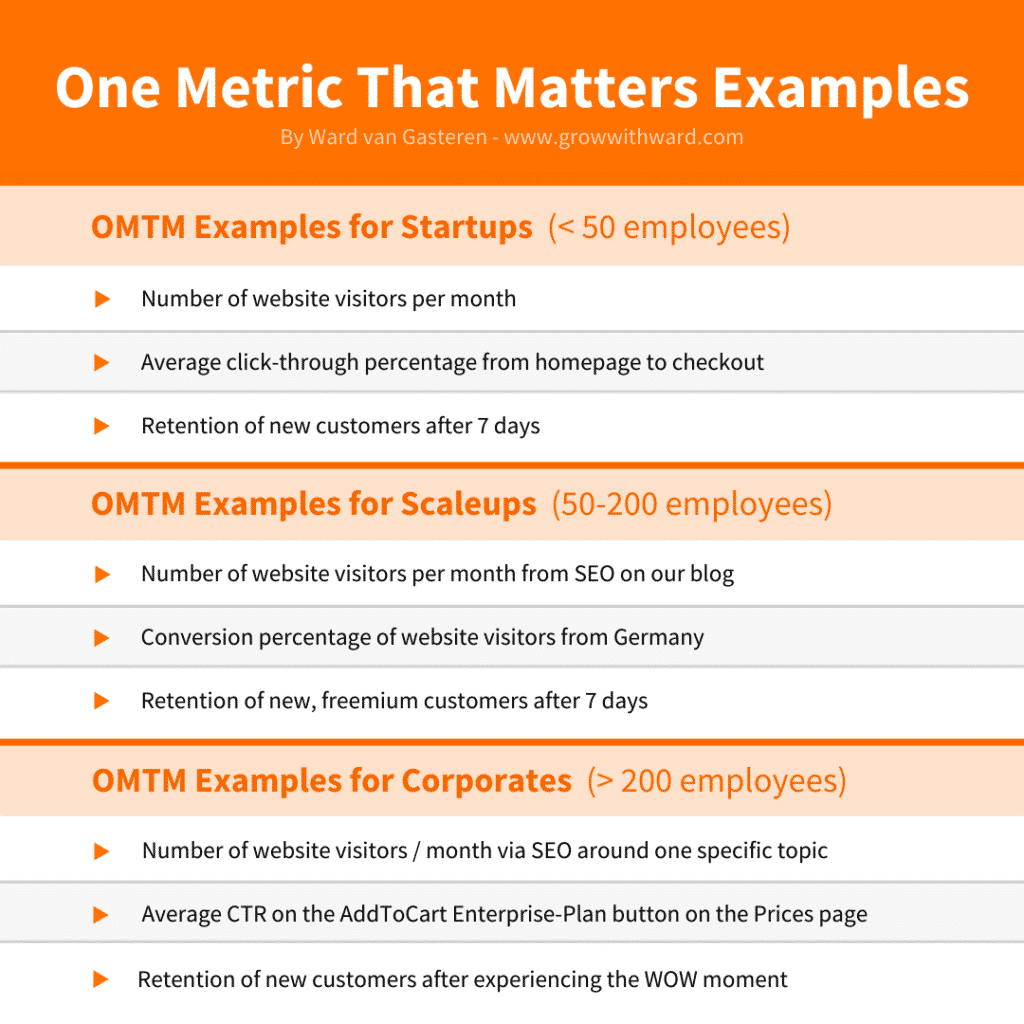

1. How to pick the right focus / OMTM

Before you start on your growth sprint, you as the Head of Growth / Growth Lead need to prepare everything for the team to dive right in.

The main thing here is to define the right target to work on right now. It will depend on the following:

- What is your organisation’s focus at the moment? (Because you want to make an impact where it matters!)

- Where do you see the most room for improvement? Maybe you haven’t touched the retention process at all, so just adding some basic emails/notifications would be very useful!

- What gets your team enthusiastic? If they’re not into it, you need to push them much harder than when it’s something they care about as well.

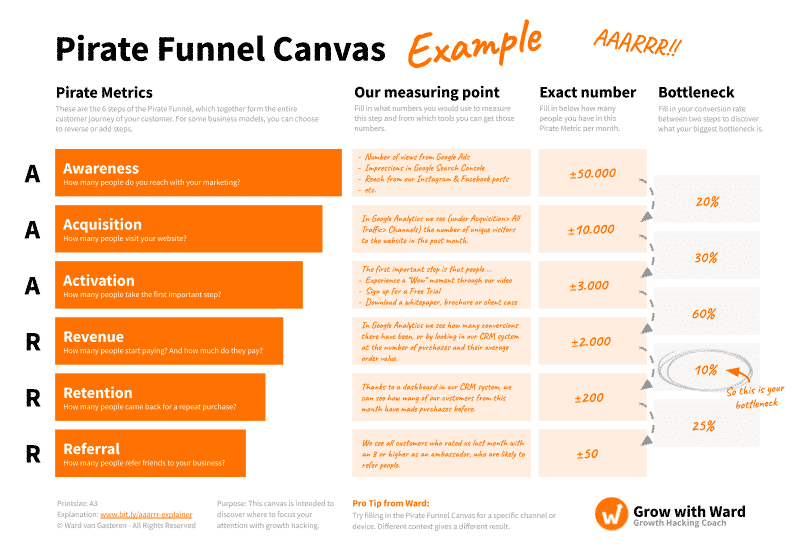

For corporate growth teams, you can go very narrow and focus on a specific part of your audience/ICP, with a specific use case and a specific part of your Pirate Funnel.

For startup growth teams, you want to aim at big swings and probably have a good idea which step of your Pirate Funnel is the biggest bottleneck:

- If your product isn’t retaining users well, focus on your activation/retention first to get to Product/Market-Fit.

- If your retention is going well, but you don’t have a steady stream of new customers, focus on Awareness/Acquisition first to find a steady Growth Channel and a tactic that is repeatable.

- After that, you can start working on revenue and referral.

Great – you’ve picked a problem to work on…

This will now be your One Metric That Matters (OMTM), the metric you’ll run experiments on for probably the next 2 to 4 months.

But there is one very, very important step before you dive into brainstorming experiments:

“Why is your problem a problem?”

2. How to find the real bottleneck

If I say that you should improve your Retention, you could probably come up with 100 experiment ideas. But if I said that you need to improve your Retention because people forget about you at the start of the next quarter, you’d have a much better idea of how to hit the nail on the head.

In my experience, understanding what is stopping your users doubles or triples the impact of your experiments.

So before you start brainstorming, understand your bottleneck by looking at the following.

Ways to understand the underlying problem behind your bottleneck

- Ask Support or Sales what they know about customers regarding your focus

- Watch heatmaps & recordings for relevant pages, to see what is happening.

- Analyze the step-by-step click-through rates for that step.

- Call some customers and ask them to talk you through that step.

- Check forums regarding your issue to understand what frustrates users

This is a nice step to involve your team in: hypothesize together about why people are not converting. This way you get more buy-in from your stakeholders and get the team more involved in the problem, so they’re already thinking about solutions, and you iron out some of your personal assumptions by looking at it with multiple people.

3. Create a backlog of growth experiments

For Growth Experiments, Quantity definitely leads to Quality

Next up, you want to create a huge backlog of growth ideas that address the bottleneck you hypothesized about in step 2.

How to organize a 1-hour brainstorm / Sprint-Kickoff session with your Growth Team & stakeholders:

- 5 min (or beforehand): Ask everyone to prepare by reading the data

- 15 min (or beforehand): Ask everyone to do some inspiration-research by themselves: look at competitors, look at random other growing companies, read about best practices.

- 10 min: Write down your ideas for yourself only! Aim for 10 per person. It’s not about quality – the weirder the better, because weird ideas are what help us think outside the box and make big swings. By doing it on your own, you avoid Tunnel Focus and the HiPPO effect!

- 10 min: Discuss all ideas – everyone just reads out their own ideas (without comments from others!) and everyone can ask questions to elaborate on ideas or add new ideas on the board when they get inspired by others. It’s important to keep it a safe space where everyone dares to be weird and creative, so that you can get the best ideas out, and you can always focus in later on the better ideas without hurting people’s creativity.

- 10 min: Prioritize all ideas – I’ll fully explain this in the next section. To avoid contamination and endless discussions (“Should this be a 3 or a 4?”), everyone should score on their own, and you take the average.

- 10 min: Agree on the top 3-5 experiments that you’ll begin with & assign owners.

- (Optional) 15 min: Plan the chosen experiments with a hypothesis, to-dos and deadlines – I’ll discuss how to plan your experiments after this.
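The “score on your own, then take the average” rule can be sketched in a few lines of Python. This is a minimal illustration, assuming everyone hands in one score per idea on the same scale; the people, idea names and scores are hypothetical.

```python
from statistics import mean


def average_scores(scores_by_person: dict[str, dict[str, float]]) -> dict[str, float]:
    """Average each idea's score over everyone who scored it independently."""
    per_idea: dict[str, list[float]] = {}
    for person_scores in scores_by_person.values():
        for idea, score in person_scores.items():
            per_idea.setdefault(idea, []).append(score)
    return {idea: mean(values) for idea, values in per_idea.items()}


def top_ideas(averages: dict[str, float], n: int = 5) -> list[str]:
    """Return the n best ideas to start with; everything else stays on the backlog."""
    return sorted(averages, key=averages.get, reverse=True)[:n]


# Hypothetical scores, collected from each person separately before any discussion.
scores = {
    "Anna": {"Onboarding email": 7, "Pricing FAQ": 5, "Referral bonus": 8},
    "Ben": {"Onboarding email": 6, "Pricing FAQ": 8, "Referral bonus": 7},
    "Chris": {"Onboarding email": 8, "Pricing FAQ": 6, "Referral bonus": 3},
}

averages = average_scores(scores)
print(top_ideas(averages, n=2))  # ['Onboarding email', 'Pricing FAQ']
```

Since only the split between the top few ideas and the rest matters, small gaps between averages (about 6.3 versus 6.0 here) aren’t worth a debate.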

Common pitfalls when brainstorming experiments:

- Hippo-effect: The Hippo-effect describes the dominance of the Highest-Paid Person’s Opinion (the ‘HiPPO’). It’s common for a company’s CEO, an agency’s client or a team lead to dominate a discussion with their ideas, assumptions and worldview, which makes the rest of the team feel less safe to share all their ideas and less fairly treated.

To avoid this effect, give everyone their own time to think and encourage everyone to share their thoughts and ideas. Remember, diversity of thought is the key to getting the most innovative and disruptive ideas, which could lead to successful growth experiments. So be open to all ideas and evaluate them fairly, using a fair prioritization model like the ones we’ll discuss in the next section.

- Tunnel focus: The tunnel effect (also known as groupthink) happens when a group of people gets sucked into thinking about one idea, often because it was the first one shared or because of peer pressure to agree with everyone else.

This often limits the diversity of opportunities the group comes up with. This is why I let people write down their own ideas without discussion, so that everyone thinks in their own direction first and every idea then gets the chance to be mentioned.

This way you get more creative ideas and uncover different directions, because sometimes (or actually most times…) you’ll find that the best solution isn’t the first one you thought of.

- Forgetting the real cause: Perhaps the biggest pitfall is ‘Solution-Bias’, where people dive straight into naming solutions without fully understanding the underlying problem behind the goal you’re working on. This way you can come up with many solutions, but you probably won’t really hit the nail on the head.

For example, let’s say you’re trying to improve the conversion rate on your website, but you haven’t fully understood the root causes of why visitors are leaving without making a purchase. If you immediately jump into generating solutions, you might come up with ideas like changing the layout, adding more images, or running a discount promotion.

However, these solutions may not actually address the root causes of the problem. Perhaps visitors are leaving because they don’t trust your brand or they’re confused about your pricing. By taking the time to fully understand the problem, you can identify these root causes and generate solutions that will actually address them.

This way you can be sure to hit your goal straight at the core with the most relevant growth ideas that are most likely to have a real impact.

4. Prioritize ideas (The step most commonly overcomplicated)

Basically, you want to know which experiment is best to start with. For some, that’s the quickest win (so small ideas first), but what if all your big growth wins are just a bit more complicated?

To me, prioritization is simply about separating the best ideas from the worst ones.

It’s not about 1 vs 2 vs 3. It’s about the top 5 versus the rest. You just need to know which to start with and which to save for later.

Scoring is subjective (everyone is just guessing whether the Potential is a 6 or a 7…), so if you all agree that your second-highest-scoring experiment is the best one to start with, for whatever reason, just start with that one.

And be open to adjusting your scoring variables, since your scoring model might make you go only for low-hanging fruit and never start on bigger ideas like Growth Loops.

I usually use ICE scoring, because I find it the easiest and fastest to score: the score names are easy to understand, anyone on the team can answer the questions and it’s relatively short. But just to give you a complete overview…

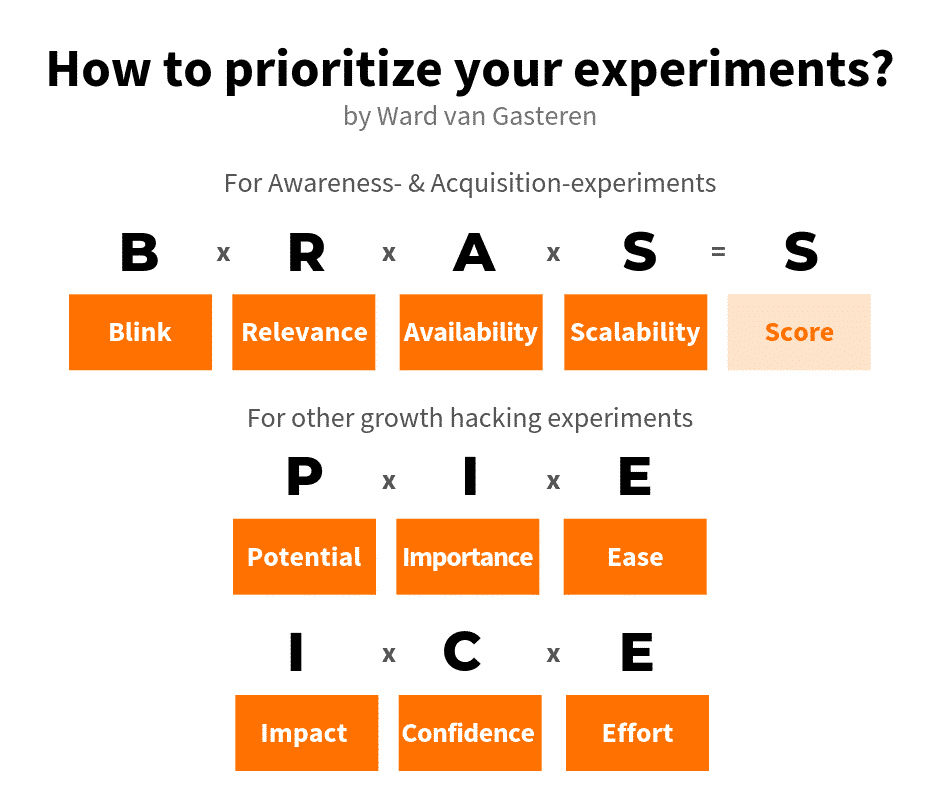

The most common models to score your experiment ideas:

- ICE (Impact, Confidence, Ease): Impact = How big would the impact be if this experiment is successful? Confidence = How sure are we that this idea would work/how much supporting evidence do we have, like survey responses, heatmaps or best practices? Ease = How easy is this idea to execute (considering the needed resources, skills and technical challenges)?

- PIE (Potential, Importance, Effort): Basically the same as ICE, but different order and different wording. So check what language is most common in your organization, so that it blends in smoothly. ‘Importance’ goes more in-depth on how on-point the experiment is with the set goal.

- BRASS by Growth Tribe (Blink, Relevancy, Availability, Scalability): This model is especially useful when scoring marketing ideas. It works as follows: Blink = Based on gut feeling, how well will this work? Relevancy = How relevant is this idea to our audience and product (e.g. is our audience on TikTok)? Availability = Is this channel available to us based on our budget, skills and tech? Scalability = If this is successful, can we easily scale it up?

- RICE (Reach, Impact, Confidence, Effort): Again, very similar to ICE and PIE. ‘Reach’ is useful if you’re running experiments in different places (different pages on your site, or different channels) and want to compare them.

- PXL by CXL is a very different scoring model, where an idea collects points based on yes-or-no questions, like “Will more than 1000 people see this experiment?”, “Do we have supporting evidence that this will work?” or “Is the change instantly noticeable?”. For every ‘yes’ the idea gets 1 extra point, and the idea with the most points ranks highest. It’s a bit more work, since there are many more questions, but it’s nice that it takes some subjectivity out of scoring.
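To make the differences between these models concrete, here is a minimal Python sketch of three of them. The ICE variant shown (a plain average of three 1-10 scores) is one common convention, not the only one; the RICE function follows the usual Reach × Impact × Confidence ÷ Effort definition; and the PXL function is simplified to the one-point-per-‘yes’ rule described above (the real PXL sheet has more questions). All inputs are hypothetical.

```python
def ice_score(impact: float, confidence: float, ease: float) -> float:
    """ICE as a plain average of three scores on the same scale (e.g. 1-10)."""
    return (impact + confidence + ease) / 3


def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """RICE: reach (people per period) x impact x confidence (0-1) / effort."""
    if effort <= 0:
        raise ValueError("effort must be positive")
    return reach * impact * confidence / effort


def pxl_score(answers: dict[str, bool]) -> int:
    """Simplified PXL: one point for every question answered 'yes'."""
    return sum(1 for answer in answers.values() if answer)


print(ice_score(impact=8, confidence=6, ease=7))  # 7.0
print(rice_score(reach=2000, impact=2, confidence=0.8, effort=4))
print(pxl_score({
    "Will more than 1000 people see this experiment?": True,
    "Do we have supporting evidence that this will work?": True,
    "Is the change instantly noticeable?": False,
}))  # 2
```

Each model produces numbers on its own scale, so compare scores only within one model, and mainly to split the top ideas from the rest.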

Anyway, I think the scoring model is just a supporting element, so don’t spend too much time on discussing which one to choose and just pick one and adjust it on the go when needed. Try to keep it simple, since you probably have to score a lot of ideas after each brainstorm.

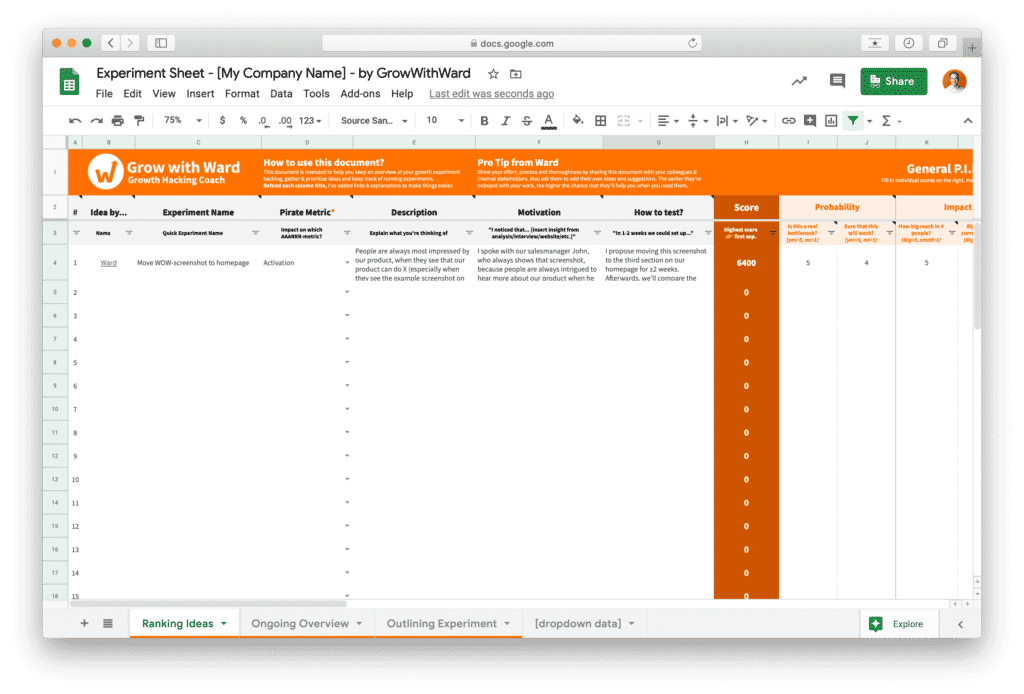

Now that you’ve found your first growth experiment(s) to start with, let’s see how to best document growth experiments.

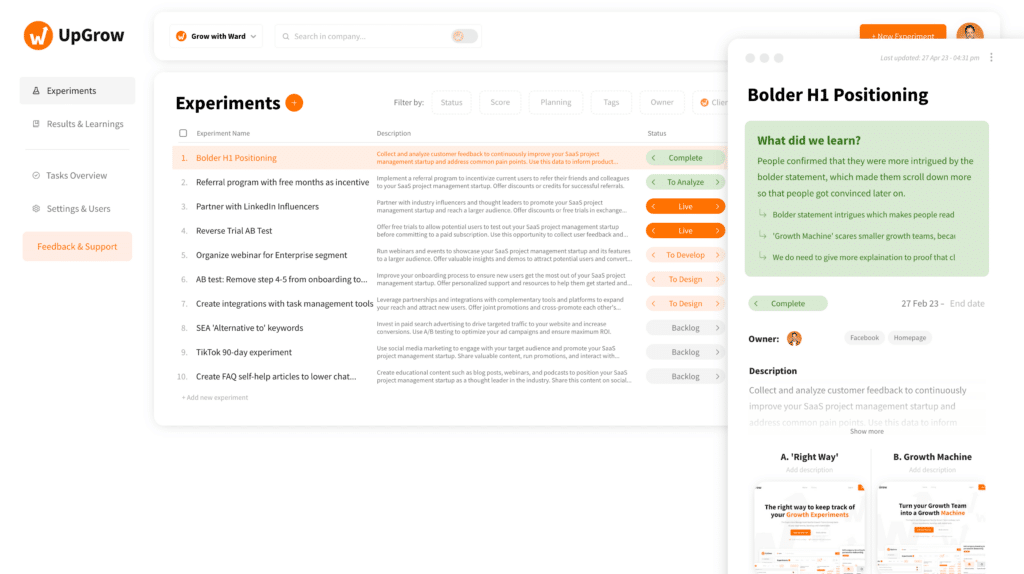

5. How to plan your growth experiment

Keep in mind that the main purpose of documenting your growth experiments is to hold yourself accountable during analysis, and to make sure that future you and future colleagues know what has been tested and, afterwards, what you’ve learned from it. So pick a tool that is easily searchable and understandable for your whole team: hiding learnings in separate documents makes this hard, so make sure that you have one central place for your experiments.

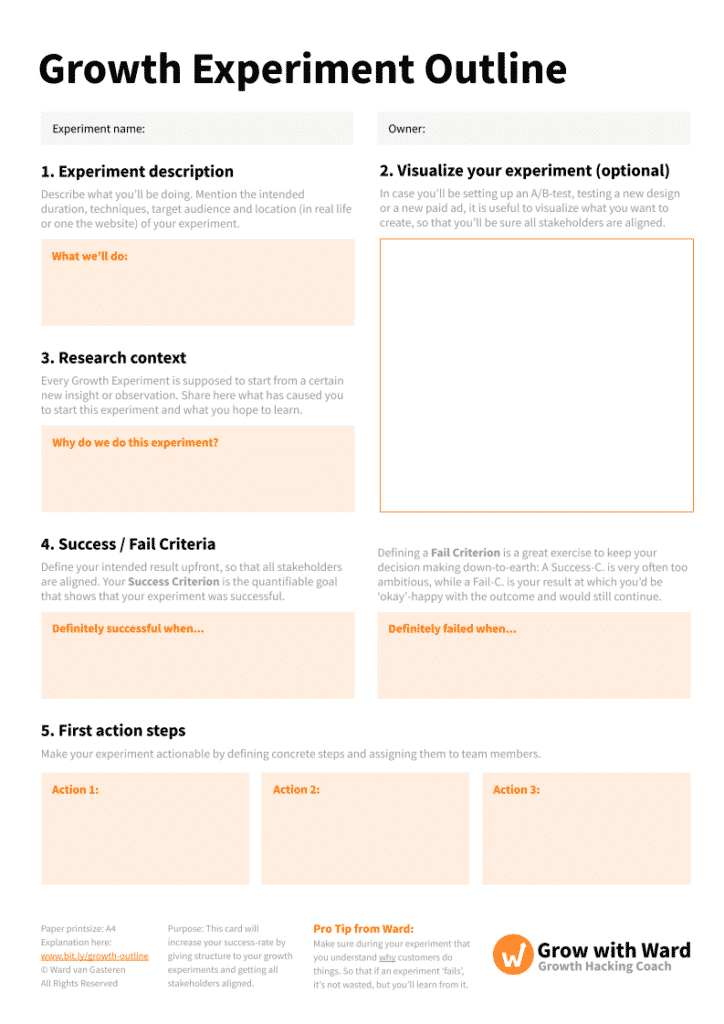

These are the things to document for every Growth Experiment, for sure:

1. Name + Description

Clearly describe what you test so that everyone understands it. Keep it short. Describe specifics. Look at it through the eyes of an outsider. Some basic questions:

- What will we change where and how?

- What is our reasoning/what led to this experiment?

2. Hypothesis

Your hypothesis is your ‘Current Knowledge Statement’. I use the following statement, but there are others as well:

“Based on … [Observation/Data],

We add/remove/change … [Action],

Which leads to … [Quantified Result],

Because … [Customer Reasoning]”

It needs to describe what you currently believe will happen, why that will happen and how that will be measured.
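If your experiments live in a spreadsheet or tool you can script against, the four-part statement above can be enforced with a small template. The Python sketch below is hypothetical: the `Hypothesis` class and its digit check (a crude stand-in for “the result must be quantified”) are illustrations, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    observation: str  # "Based on ..."               [Observation/Data]
    action: str       # "We add/remove/change ..."   [Action]
    result: str       # "Which leads to ..."         [Quantified Result]
    reasoning: str    # "Because ..."                [Customer Reasoning]

    def __post_init__(self) -> None:
        # Every part must be filled in, otherwise the hypothesis can't be judged later.
        for part, text in vars(self).items():
            if not text.strip():
                raise ValueError(f"the '{part}' part is empty")
        # Crude check that the expected result contains a number to measure against.
        if not any(char.isdigit() for char in self.result):
            raise ValueError("the result should be quantified, e.g. '+10% sign-ups'")

    def statement(self) -> str:
        return (
            f"Based on {self.observation},\n"
            f"We {self.action},\n"
            f"Which leads to {self.result},\n"
            f"Because {self.reasoning}."
        )


hypothesis = Hypothesis(
    observation="support tickets asking what each plan includes",
    action="add a short FAQ below the pricing table",
    result="a 10% higher plan-selection rate",
    reasoning="visitors are confused about our pricing",
)
print(hypothesis.statement())
```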

When the results are not exactly what you expected: Congrats! You’ve learned something that changes your thinking about your audience/business/industry. In the last step, I’ll show you four ways to capitalize on these learnings.

3. (AB-Test) Variants

Not every experiment has to be an AB test, but you should always document clearly what you’re putting out there, what the differences are between the variants and make it visual!

The saying ‘a picture is worth a thousand words’ is soooo true for experiments, and especially for AB tests: either you don’t write it down clearly enough, or you write down too much and people won’t read it. Just add some screenshots.

4. Metrics to Track, and Targets

You’re not testing a tactic, you’re testing a story. A story that consists of beliefs, documented in your hypothesis.

The correctness of that story can’t be measured in just one metric. It always consists of multiple metrics and every metric needs to be in line for the whole story to be true.

For example: if you test a new title on your landing page and, as expected, your bounce rate goes down and your conversion rate goes up, but your revenue from that page goes down or stays flat, then your story is not fully correct.

Maybe you thought it would all work, because that’s what user interviews showed you, but maybe you only spoke to a certain part of your audience.

This is actually a great learning, because now you know that the new version works better for the interviewed audience, but the original worked better for the rest. If you can understand why by talking to those other users, you might be able to create two landing pages that each work best for one audience, and you can translate that insight to ads, emails, other pages, etc.
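One way to stay honest about “the whole story” is to write down, before launch, the direction you expect each metric to move, and then check them all together afterwards. The Python sketch below uses hypothetical numbers that mirror the landing-page example; it only compares raw before/after values and does not test for significance.

```python
def check_story(expected: dict[str, str], before: dict[str, float],
                after: dict[str, float]) -> dict[str, bool]:
    """For every metric, did it move in the expected direction ('up' or 'down')?"""
    checks = {}
    for metric, direction in expected.items():
        change = after[metric] - before[metric]
        if direction == "up":
            checks[metric] = change > 0
        elif direction == "down":
            checks[metric] = change < 0
        else:
            raise ValueError(f"unknown direction {direction!r} for {metric}")
    return checks


# Hypothetical numbers for the new-title test: bounce rate and conversion
# move as expected, but revenue from the page stays flat.
expected = {"bounce_rate": "down", "conversion_rate": "up", "revenue": "up"}
before = {"bounce_rate": 0.55, "conversion_rate": 0.030, "revenue": 12000.0}
after = {"bounce_rate": 0.48, "conversion_rate": 0.034, "revenue": 12000.0}

checks = check_story(expected, before, after)
print(checks)  # {'bounce_rate': True, 'conversion_rate': True, 'revenue': False}
print("story holds" if all(checks.values()) else "story is not fully correct")
```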

Set Targets as Success/Fail Criteria

Of course, when you run experiments, you should set goals for yourself. I always recommend setting both a success criterion and a fail criterion.

The success criterion is easy: it’s a goal to strive for, set as ambitiously as you normally set goals. It’s the number that makes you super happy when you reach it.

The fail criterion is an extra challenge to make sure that you stay honest with yourself: it’s the number below which your experiment has definitely failed.

Why is this useful? Take this example: you’re running a new campaign and set the goal at 1000 leads. At 1000 or above, it’s clear that it was a success, but what do you do when you hit 990 leads? It’s still good, right? So you’ll probably still decide to go ahead with it. But what about 900? Or 800? Where is the floor below which you accept that it failed? Quantify this beforehand, so that when you get to the analysis you can’t talk yourself into an extra win.
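Both criteria can be written down as two numbers and checked mechanically once the results are in. A minimal Python sketch using the lead-campaign numbers from the example; the 800-lead fail line is a hypothetical choice, and what you do with a result in the grey zone between the two lines is still a judgement call you make against your hypothesis.

```python
def judge(result: float, success_at: float, fail_below: float) -> str:
    """Compare a result with criteria that were fixed before the experiment started."""
    if fail_below > success_at:
        raise ValueError("the fail criterion can't be above the success criterion")
    if result >= success_at:
        return "success"
    if result < fail_below:
        return "fail"
    return "in between"  # neither criterion met: discuss it, don't round it up to a win


for leads in (1000, 990, 900, 790):
    print(leads, judge(leads, success_at=1000, fail_below=800))
```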

Important Note on ‘Failing’

Most of the time, the best learnings come from being honest about a failed experiment. Apparently you, your colleagues and probably also your competitors (if they did this on their website or in their marketing as well) thought this experiment would be a great idea for the audience, but your actual customers don’t like it that much. That’s a great learning about what you shouldn’t waste money on, and a sign that you should continue in the other direction.

5. Planning Details:

5a. Experiment Owner

Assign one person as the main responsible person to lead this experiment: to call the shots, to talk to stakeholders and chase team members to do their job.

5b. Tasks & Subtasks

Break the experiment down into actionable tasks.

5c. Start & End Date

When do you plan to start the experiment, and when should you have enough data to conclude it? This exercise is also a great sanity check to make sure that you’re launching the test in a place where you’ll get enough data to draw conclusions from. You can use this pre-test significance calculator to see how much traffic you would need if you’re aiming for a statistically significant result.

To me, it doesn’t have to be significant, because business isn’t science, but we also need to be sure ahead of time that we aren’t wasting resources.
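For a rough number without a calculator, the standard sample-size formula for comparing two conversion rates (a two-sided two-proportion z-test) fits in a few lines of Python. This is a generic textbook approximation, not necessarily what any particular calculator uses; the baseline rate, expected lift, daily traffic and the conventional 5% significance / 80% power settings are all assumptions to replace with your own.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed per variant to detect a change from baseline to expected rate."""
    if not (0 < baseline < 1 and 0 < expected < 1) or baseline == expected:
        raise ValueError("rates must be between 0 and 1 and differ from each other")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline + expected) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(baseline * (1 - baseline)
                                 + expected * (1 - expected))) ** 2
    return ceil(numerator / (expected - baseline) ** 2)


def days_needed(per_variant: int, variants: int, daily_visitors: int) -> int:
    """How long the test must run if traffic is split evenly over the variants."""
    return ceil(per_variant * variants / daily_visitors)


# Hypothetical: 3% baseline conversion, hoping for 3.6% (+20% relative).
n = sample_size_per_variant(0.03, 0.036)
print(n, "visitors per variant")
print(days_needed(n, variants=2, daily_visitors=1000), "days at 1,000 visitors/day")
```

With these assumptions it comes out at roughly 14,000 visitors per variant, or about four weeks at 1,000 visitors a day. A bolder change (a bigger expected difference) needs far less traffic, which is one reason small tweaks on quiet pages rarely give clear answers.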

5d. Visuals & Attachments

Again, add some screenshots of your variants or exports of the data analysis leading up to the experiment, so that future colleagues can read what you did and build on your work.
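If your central place for experiments is something you can script against, the fields above map naturally onto one record per experiment. A minimal Python sketch; the `ExperimentRecord` class, its field names and the example values are hypothetical, not the schema of any particular tool.

```python
from dataclasses import dataclass, field, fields
from datetime import date
from typing import Optional


@dataclass
class ExperimentRecord:
    # 1. Name + description
    name: str
    description: str
    # 2. Hypothesis
    hypothesis: str
    # 3. Variants (e.g. links to screenshots)
    variants: list[str]
    # 4. Metrics to track and success/fail criteria
    metrics: list[str]
    success_criterion: str
    fail_criterion: str
    # 5. Planning details: owner, tasks, dates, attachments
    owner: str
    tasks: list[str] = field(default_factory=list)
    start: Optional[date] = None
    end: Optional[date] = None
    attachments: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.start and self.end and self.end < self.start:
            raise ValueError("end date is before start date")

    def missing(self) -> list[str]:
        """Fields that are still empty, so gaps show up before launch."""
        return [f.name for f in fields(self) if getattr(self, f.name) in ("", [], None)]


record = ExperimentRecord(
    name="Pricing FAQ",
    description="Add a short FAQ below the pricing table on the pricing page",
    hypothesis="Based on support tickets, we add an FAQ, which leads to +10% "
               "plan selections, because visitors are confused about pricing.",
    variants=["control.png", "with-faq.png"],
    metrics=["plan-selection rate", "pricing-related support tickets"],
    success_criterion="+10% plan-selection rate",
    fail_criterion="less than +3% plan-selection rate",
    owner="Anna",
    start=date(2024, 3, 4),
    end=date(2024, 3, 31),
)
print(record.missing())  # ['tasks', 'attachments']
```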

6. Start working in week-to-week sprints

The execution should be the easiest part of this process. There are countless growth marketing tools to save yourself some time.

A time management tip from me: the execution of an experiment should stay under 2 full workdays. If you’re making it bigger than that, you’re probably making it too complicated or too perfect. When planning your experiment, assume that it will be a total fail, so that you’re more aware of how much time you put into it. If you’re doing it well, about 50% of your experiments should match your predictions. The other 50% should be there to show you new learnings on risky bets.

Your job as Head of Growth / Growth Lead

- Task Management

Break the experiments down into easy-to-start tasks, assign them and define them together so that people know what to do. After that, it’s just about checking in: are they on track to get it done, did everyone understand the experiment correctly, and is nobody being too perfectionistic?

- Stakeholder Management

Now that the experiment is going into preparation, this is a good time to check in with:

- Stakeholders who need to know about the experiment when it goes live. For example, Product/Development should not be surprised by a new element on the page, and Support/Sales don’t want to get a call from a customer that they can’t answer.

- Your boss/client, who is financing this project, to show them where the resources are going.

- Others who might be interested in the learnings from your experiment. As an exercise, I always ask myself beforehand: “What could department X learn from this experiment if it wins/fails?”. For example, your marketing team could improve their email subject lines, Product can take it into account for their roadmap planning, and Sales might want to dive deeper into the topic of your experiment to better understand the customer’s interest. And how great would it be if they can already think about your topic, before the results come back: together you might come to even better conclusions/actions.

- Oversee the pipeline / experiment velocity

The easiest explanation of Growth Hacking might be: More Experiments = More Growth. Obviously, I’m cutting some corners there (good experiment planning, proper analysis and drawing strong learnings from it), but next to that it comes down to how many useful experiments you can run every month.

If you run double the experiments, you can get double the wins and double your impact on the organisation. This is all a product of how many insights you can collect that lead to experiments, how many experiments you can set up, how many you can run at the same time and how many you can analyze and act on. It’s a funnel! 🙌 Haha no, but for real: keep an eye on which part of your process is the biggest bottleneck, and set yourself goals as team lead to run more experiments each month by removing bottlenecks or planning more strategically.
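Treating the pipeline as a funnel, one simple way to spot the bottleneck is to estimate how many experiments each stage can handle per month and look for the smallest number, since the whole pipeline can’t move faster than its slowest stage. The Python sketch below assumes the four stages named above and uses hypothetical monthly capacities.

```python
def pipeline_bottleneck(monthly_capacity: dict[str, int]) -> tuple[str, int]:
    """Return the slowest stage and its capacity, which caps experiments per month."""
    if not monthly_capacity:
        raise ValueError("no stages given")
    stage = min(monthly_capacity, key=monthly_capacity.get)
    return stage, monthly_capacity[stage]


# Hypothetical estimates of what the team can handle per month at each stage.
capacity = {
    "insights that lead to experiments": 20,
    "experiments set up": 8,
    "experiments run": 6,
    "experiments analyzed and acted on": 4,
}

stage, per_month = pipeline_bottleneck(capacity)
print(f"Bottleneck: {stage} ({per_month} per month)")
```

Adding capacity anywhere other than the slowest stage won’t raise the number of experiments you complete; after fixing it, run the check again, because the bottleneck tends to move.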

7. How to analyze your experiment results

Analyzing your experiment results can be as complicated as you want. Put simply, you look at the results for the metrics you tracked and the goals you set. From there you can go three ways, but they all end up in the same place: what did we learn and what are we going to do with it?

Tip: Look at different segments, since sometimes an experiment works well for one audience, but that effect doesn’t show up in the total audience.
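As an illustration of why segments matter, the Python sketch below computes the relative lift of a variant over the control per segment and for the total. The segment names and numbers are hypothetical, picked so that the total looks flat while the segments move in opposite directions. Smaller segments are noisier, so a segment-level result is best treated as a new hypothesis rather than a proven win.

```python
from collections import defaultdict


def lift_by_segment(rows: list[tuple[str, str, int, int]]) -> dict[str, float]:
    """Relative lift of 'variant' over 'control' per segment and for the total.

    Each row is (segment, arm, visitors, conversions), with arm 'control' or 'variant'.
    """
    totals: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])
    for segment, arm, visitors, conversions in rows:
        for key in ((segment, arm), ("total", arm)):
            totals[key][0] += visitors
            totals[key][1] += conversions

    def rate(segment: str, arm: str) -> float:
        visitors, conversions = totals[(segment, arm)]
        return conversions / visitors

    segments = sorted({segment for segment, _ in totals})
    return {s: rate(s, "variant") / rate(s, "control") - 1 for s in segments}


rows = [
    ("mobile", "control", 5000, 100),
    ("mobile", "variant", 5000, 150),
    ("desktop", "control", 5000, 250),
    ("desktop", "variant", 5000, 200),
]
for segment, lift in lift_by_segment(rows).items():
    print(f"{segment:>8}: {lift:+.0%}")
```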

🟢 Win – Numbers are conclusive & positive

Great – we’ve learned that (some) people really love this: let’s implement this & see how we can do more of this here or in other places. For example, if leaning into a certain angle like ‘We’re cheaper than the competition’ works very well, then:

- How can we emphasise that even more in a new experiment?

- How can we communicate this USP on other channels?

- How can we show our price-benefit in other places?

- What other experiments do we have on the backlog that become more likely to succeed now that we know this?

🟡 Flat – Numbers are inconclusive, not positive or negative

Not bad, but not ideal: If you’ve tested re-wording the button CTA three times and it keeps showing no result, then you can only conclude that you’re working on something that your audience doesn’t care about enough to make them behave differently. From here on:

- Have you tested this multiple times in different ways? Then move on and note down that this is not an interesting type of experiment to run for now.

- If you still believe in this, test it bigger/bolder. Maybe your change was just too small, so try a much bolder version: what if we changed the whole page to emphasize this?

- Check your backlog for experiments that should be scored lower, since this area doesn’t seem to have the impact you wanted.

🔴 Fail – Numbers are conclusive & negative impact

Best! Because apparently everyone in your team believed this was the right way (since you scored this experiment so highly beforehand), but it turns out that the opposite was true. You’ve potentially saved your company a lot of money by not just implementing this without testing.

Your biggest fails often become your biggest wins, because they show that your current state is very much heading in the right direction: “Do more of this”, as opposed to the direction you (and probably your competition) were going. From here:

- Lean more into the original. If testimonials from corporates didn’t work, since you serve startups, then implement more startup quotes instead, emphasize your startup-use cases more specifically or emphasize ‘for startups’ in your headings.

- Did we make this change somewhere else and can we revert it?

- How does this impact the scoring of current experiments? Have you scored other ideas high or low, because of your original assumption? Take some time to go through the scoring of relevant ideas to adjust it where the potential has now changed.
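For a conversion-rate metric, one common way to sort a result into win, flat or fail is a two-proportion z-test: if the difference isn’t significant, call it flat; otherwise the sign of the difference decides. The Python sketch below assumes a single conversion metric and a conventional 5% threshold, both of which are choices rather than rules (business isn’t science, so you may accept a looser threshold); the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def classify(control_visitors: int, control_conversions: int,
             variant_visitors: int, variant_conversions: int,
             alpha: float = 0.05) -> str:
    """Label a result 'win', 'flat' or 'fail' with a two-sided two-proportion z-test."""
    p_control = control_conversions / control_visitors
    p_variant = variant_conversions / variant_visitors
    pooled = ((control_conversions + variant_conversions)
              / (control_visitors + variant_visitors))
    std_error = sqrt(pooled * (1 - pooled)
                     * (1 / control_visitors + 1 / variant_visitors))
    if std_error == 0:
        return "flat"  # no conversions at all (or only conversions): nothing to compare
    z = (p_variant - p_control) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    if p_value >= alpha:
        return "flat"
    return "win" if z > 0 else "fail"


print(classify(10_000, 300, 10_000, 360))  # win
print(classify(10_000, 300, 10_000, 310))  # flat
print(classify(10_000, 300, 10_000, 240))  # fail
```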

The second part of Analyzing Experiments: Learnings & Insights

Here we dive into all the qualitative things you’ve learned from this experiment: What is our conclusion about user behaviour? What kind of comments did we get from customers during interviews or under posts on social media? Were there certain audiences that reacted differently to the experiment? What are all the things we noticed on screen recordings or heatmaps? Every insight can lead to new or better experiments, and you won’t remember all the small details a year from now.

And of course, writing down your learnings makes sure that your team won’t have to re-run this experiment in the future just because they don’t know it was ever done or can’t find the learnings they need.

8. Complete cycle

So, as mentioned, it’s a cycle. Yet I see most growth teams simply move on to the next experiment on the backlog, like waterfall planning. Instead, try to turn your growth process into a flywheel, so that every experiment makes your next sprint more effective and you actually build on top of the experiments before it.

Iterate ♻️

Instead of going for the next experiment on your backlog, always try to keep space on your next sprint for an iteration on a previous experiment. Now the knowledge is still fresh and you’re building momentum inside your team and organisation.

Create new ideas 💡

At the end of the experiment, take some time to think about new places where you can add the winning variant, or new ideas that sprang from the insights of this experiment.

Share learnings with stakeholders 🗣️

Ask yourself, per department, how you can translate the learnings from this experiment to other places. Maybe Sales can apply this during calls? Or Design can take this into account the next time they work on something related? Or can we look into new partnerships based on these insights?

Implement/Systematize 📂

Lastly, if something was successful, hand it over to development/product to implement the winning variant. If it’s more of a marketing tactic, think about creating a playbook for it, so that you can make it repeatable, and discuss with the marketing team how best to apply it systematically. Once you’ve confirmed that it works, you can also double down on it by handing it over to a specialist who can get the most out of this tactic.

Last tip: document, document, document – this is your internal long-term flywheel.

If you store all the learnings from this year, your team can be so much more effective and you can go much more in-depth when the obvious ideas are gone. Also, when you or another teammate leaves, the team doesn’t fall behind, and new teammates can hit the ground running, because they can catch up on everything in their first week on the job.

Good luck to you all 🍀 & feel free to reach out to me on LinkedIn for any questions.

FAQ:

Some nice tools to help you: Upgrow, as a central place to manage your growth process, including experiments, tasks and learnings. For inspiration for experiment ideas, you can check Growth.Design, Gleam’s Library or Ladder’s Tactic Playbook. Or have a look at this list of Growth Hacking Tools to make the execution easier.

- Set success/fail criteria to stay honest about the experiment’s outcome and learn from failure.

- Failed experiments lead to the best insights: lean more into the original variant and re-evaluate the scoring of other ideas.

- Document multiple metrics that align with the overall story you’re testing.

- Use one central tool that is easily searchable and understandable for your team to document growth experiments.

- Involve the team in hypothesizing to get buy-in and multiple perspectives.

- Take enough time to analyze and understand the bottleneck by interviewing customers and collecting data.

See this free Growth Experiment Spreadsheet, or have a look at these: https://growthmethod.com/growth-experiment-template/